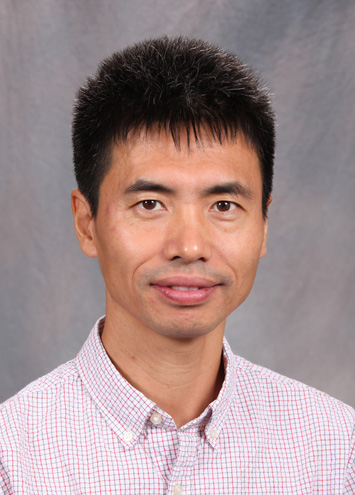

Erdal Arıkan

- Professor

- Bilkent University

- Title: Polar Coding for Tb/s Applications

- Abstract: The talk will discuss polar codes for Tb/s applications, where implementation metrics such as energy efficiency and area efficiency become the primary design constraints.


Bio:

Erdal Arıkan (IEEE Fellow) received the B.S. degree in electrical engineering from the California Institute of Technology, Pasadena, CA, USA, in 1981, and the M.S. and Ph.D. degrees in electrical engineering from the Massachusetts Institute of Technology, Cambridge, MA, USA, in 1982 and 1985, respectively. Since 1987, he has been with the Electrical-Electronics Engineering Department at Bilkent University, Ankara, Turkey, where he is a Professor. He is also the founder of Polaran Ltd., a company specializing in polar coding products. He received the 2010 IEEE Information Theory Society Paper Award, the 2013 IEEE W.R.G. Baker Award, the 2018 IEEE Hamming Medal, and the 2019 Claude E. Shannon Award.
